Imagine the scene: a dog crosses the street while you are driving home. You swerve violently and hit a tree. The airbag deploys, the seat belt holds you in place, and you end up with only a few scratches, albeit with a written-off car. You are probably among the many people who would instantly risk an accident to avoid hitting a dog. Even more so if it were a child!

However, there are many situations in which a person might not take that risk for a dog. The driver of a tanker truck full of fuel, for example, would not risk an explosion. Nor would a mother with her child in the back seat. Actively deciding to risk injuring someone or something we care about is never easy, and it can quickly turn into a tangled web of moral and ethical considerations.

Car-bot, follow that taxi!

Thought experiments such as these raise the question: ‘Would you allow cars to be driven only by computers?’ I would say probably not, given that a computer feels no empathy or love, nor does it understand moral and social codes, which humans have an innate ability to learn from very early childhood. Perhaps you would let the computer assist you in driving, for example by taking control if you got distracted or fell asleep. The aviation industry has already addressed this question: computers can fly an airplane, until a human pilot, with all their flying expertise and moral judgement, decides to take control.

Much like the aviation industry, medicine has also embraced Artificial Intelligence (AI), through Computer-Aided Diagnosis (CAD). Given a medical image, CAD software highlights possible areas of interest for the radiologist, who then makes the diagnosis. CAD software is getting more sophisticated by the day, and software like it will no doubt be embedded elsewhere in our lives a few years from now. It is not too extreme to imagine that your shirt might one day report your ECG, your shoes the amount of physical activity you do, or that a central artificial brain could raise an alarm if you are having a heart attack while jogging. Still, you would not want a computer to diagnose cancer and prescribe you chemotherapy: doctors are going to be empowered by AI, not replaced by it.

This is exactly the route we are taking in developing Your.MD, the world’s first AI Personal Health Assistant. The first problem we addressed was helping people who need medical advice but cannot get immediate help from a doctor. Googling symptoms is not for the faint-hearted, yet search engines remain the most common source of medical information for today’s connected humanity. We therefore built a robust symptom checker, which asks pertinent questions in order to reach a conclusion about the user’s condition. The advantage of an AI-powered symptom checker is that it can tell you to stop running before a possible heart attack, when no doctor is around. But, as explained below, we did not take the Deep Learning route for our diagnoses, and for good reason.

“Dear Chatbot, I am not feeling well.”

Given our chat-based interface, an obvious question was: ‘Shall we build an artificial doctor that talks like a real doctor?’ That is, a medical chatbot that mimics real doctors but, in reality, lacks even a basic understanding of what a human being is.

The question is a real one today, because Deep Learning algorithms (a subset of Neural Networks, a family of algorithms loosely inspired by the flow of information in the brain) can be used to create chatbots (chatting robots) of unprecedented realism.

In June 2015, two Google scientists published a paper entitled “A Neural Conversational Model”, which included a chatbot’s conversation with an unaware employee about a technical support query. The chatbot had been trained on IT help-desk conversations and then used as an artificial problem solver:

Context: Describe your problem: “I am having issues accessing vpn”

Machine: Hi

Human: hello

Machine: could you please let me know what are the operating systems you are using?

Human: i am using linux

Machine: ok it was in the ssl vpn on which you stated it was broken. Please check if you are logged in to corp network. What is the error that you are running please?

Human: i am seeing an error related to vpn

Machine: what is the error message? […]

In case you’re curious, the chatbot solved the problem.

Considering that the technology behind the chatbot is available to anyone, and that Google is at the frontier of its development, one question comes to mind: ‘Why doesn’t Google use it for a Siri-like product?’ It certainly could, since the main difficulty in building such products is finding training data, i.e. millions of previous conversations the chatbot can learn from. This is unlikely to be a problem for the biggest search engine in the world.

Google can always answer but never ask

Instead, Google is pushing products such as Google Now and Google Inbox, both of which make suggestions to the user without pretending to be human. As yet, Google hasn’t released a powerful “AskGoogle” chatbot. Is the company unable to use its own Neural Networks to “organise the world’s information”? Clearly not: Google is a hungry user and developer of new Deep Learning algorithms, as the recent buzz surrounding AlphaGo’s success shows.

Vincent Vanhoucke, Principal Scientist at Google, makes this clear: “People are finding Deep Learning a much better tool for resolving problems like …understanding documents, and ranking them for search”. He adds: “Facebook, Baidu, Microsoft and Google are all using Deep Learning in their product, and pushing the research forward.” He also identifies three broad areas where Deep Learning has recently been applied: speech recognition, computer vision and machine translation. Tellingly, Mr Vanhoucke does not mention “hyper-realistic chatbots” among the main applications of Deep Learning.

So, if Google doesn’t build a realistic chatbot for its services, why should we? At Your.MD we use Deep Learning, together with other machine learning techniques, for Natural Language Processing (NLP), which we rely on heavily to understand our users’ needs ever better. For the moment, however, our main product, the most advanced symptom checker in the world, is based on entirely different technologies.

Correlation is not Causation

We believe that a thorough understanding of the mechanisms that underpin health problems is necessary when people share their medical issues with us.

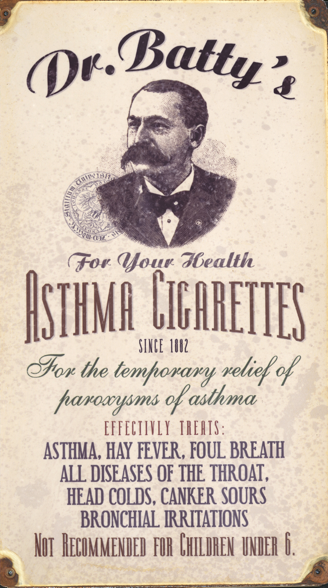

Correlation is not causation, say scientists. Smoking was not recognised as a cause of lung cancer until the mechanisms by which substances in tobacco smoke trigger cancer had been explained.

This presumed ‘innocence’ might seem more attributable to economic interests than to scientific honesty, but take a look at the latest World Happiness Report published by the Earth Institute of Columbia University. This year the report confirms “a worldwide negative relationship between parenthood and life satisfaction”. In other words, people without children tend to report higher life satisfaction than parents do. Given such data, an algorithm that learns only from correlations would advise would-be parents not to have children. Unless that value is imposed on it, no software can understand the value of human life, a deeply subjective value for us humans.

For these reasons, Your.MD’s symptom checker is based primarily on doctors’ knowledge. Doctors decide which factors might worsen a condition such as asthma (smoking, for instance). Drawing on scientific publications, doctors tell the software how common each condition is and which symptoms may be related to it.

If doctors provide such data, what remains to be done? Well, crunching numbers — something computers are very good at, and human brains are not.
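To make the “crunching numbers” step concrete, here is a minimal Python sketch of how doctor-supplied incidences and symptom probabilities could be combined. It is not Your.MD’s actual engine: it assumes a simple naive Bayes model (symptoms independent given the condition, conditions mutually exclusive), and the condition names and every number below are hypothetical placeholders, not medical data.

```python
from typing import Dict, List, Tuple

# Hypothetical, invented numbers for illustration only (not clinical data).
# Prior: how common each condition is, as a doctor might supply it.
PRIORS: Dict[str, float] = {
    "common_cold": 0.20,
    "flu": 0.05,
    "allergy": 0.10,
}

# P(symptom present | condition), also invented for the example.
LIKELIHOODS: Dict[str, Dict[str, float]] = {
    "common_cold": {"cough": 0.6, "fever": 0.1, "sneezing": 0.7},
    "flu": {"cough": 0.8, "fever": 0.9, "sneezing": 0.3},
    "allergy": {"cough": 0.2, "fever": 0.01, "sneezing": 0.9},
}


def rank_conditions(observed: Dict[str, bool]) -> List[Tuple[str, float]]:
    """Rank conditions by posterior probability given reported symptoms.

    Naive Bayes assumption: symptoms are independent given the condition,
    and the listed conditions are treated as mutually exclusive and exhaustive.
    """
    scores: Dict[str, float] = {}
    for condition, prior in PRIORS.items():
        score = prior
        for symptom, present in observed.items():
            p = LIKELIHOODS[condition][symptom]
            # Multiply by P(symptom | condition) or P(no symptom | condition).
            score *= p if present else (1.0 - p)
        scores[condition] = score
    # Normalise so the posteriors sum to 1.
    total = sum(scores.values())
    posteriors = {c: s / total for c, s in scores.items()}
    return sorted(posteriors.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    answers = {"fever": True, "cough": True, "sneezing": False}
    for condition, prob in rank_conditions(answers):
        print(f"{condition}: {prob:.2f}")
```

The design choice mirrors the division of labour described above: the dictionaries hold the knowledge a doctor would provide, while the function only does the arithmetic.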

“Render unto the computer what is the computer’s”

Most people cannot ‘compute’ the answer to this question: “Given that a test gives a positive result 5% of the time even when the condition is absent (a false positive), what is the probability of being ill if you test positive?” Statisticians know that a parameter is missing: the incidence of the condition. Once such parameters are supplied, computers can work out the answer not only for this simple case but for networks of cause-and-effect relationships far more complex than this one, where doctors, and most other humans, would fail miserably.
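The missing-parameter point can be made concrete with Bayes’ theorem. The sketch below assumes, purely for illustration, that the test always detects the condition when it is present (100% sensitivity) and tries a few hypothetical incidences; none of these figures come from the text. With an incidence of 1 in 1,000, a positive result means only about a 2% chance of being ill, far from the 95% that the 5% false-positive rate might naively suggest.

```python
def probability_ill_given_positive(incidence: float,
                                   false_positive_rate: float,
                                   sensitivity: float = 1.0) -> float:
    """Bayes' theorem: P(ill | positive test).

    incidence           -- prior probability of having the condition (assumed value)
    false_positive_rate -- P(positive | not ill), 5% in the question above
    sensitivity         -- P(positive | ill); assumed to be 100% by default
    """
    p_positive_and_ill = sensitivity * incidence
    p_positive_and_healthy = false_positive_rate * (1.0 - incidence)
    return p_positive_and_ill / (p_positive_and_ill + p_positive_and_healthy)


if __name__ == "__main__":
    # Same 5% false-positive rate, different (hypothetical) incidences.
    for incidence in (0.001, 0.01, 0.1):
        p = probability_ill_given_positive(incidence, 0.05)
        print(f"incidence {incidence:.3f} -> P(ill | positive) = {p:.3f}")
```

Running it shows how strongly the answer depends on the incidence, which is exactly the parameter the question leaves out.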

In the health sector in particular, relying too heavily on correlation and too little on causation could be a fatal mistake for companies and users alike. To paraphrase Immanuel Kant, computers can help us study the starry sky above us, but will never understand the moral law within us.

It is for this reason that robots will never take over. But they will be so heavily woven into the fabric of our lives that it will be hard to imagine how we ever lived without such sophisticated virtual assistants.

Author:

Mario Alemi, Partner, elegans.io